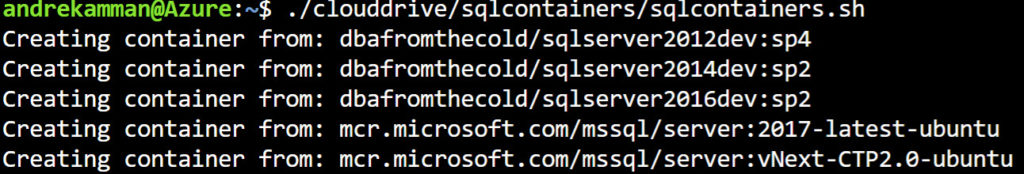

Every now and then (actually, quite often, now that I think of it) I need to test code on a bunch of different versions of SQL Server, and I prefer those SQL Server instances to be fresh every time. The quickest way that works for me: fire up my Azure Cloud Shell and start a script. The script creates all the containers asynchronously; it takes about 15 minutes because the images need to be pulled.

First things first, make sure you have your Azure Cloud Shell set up.

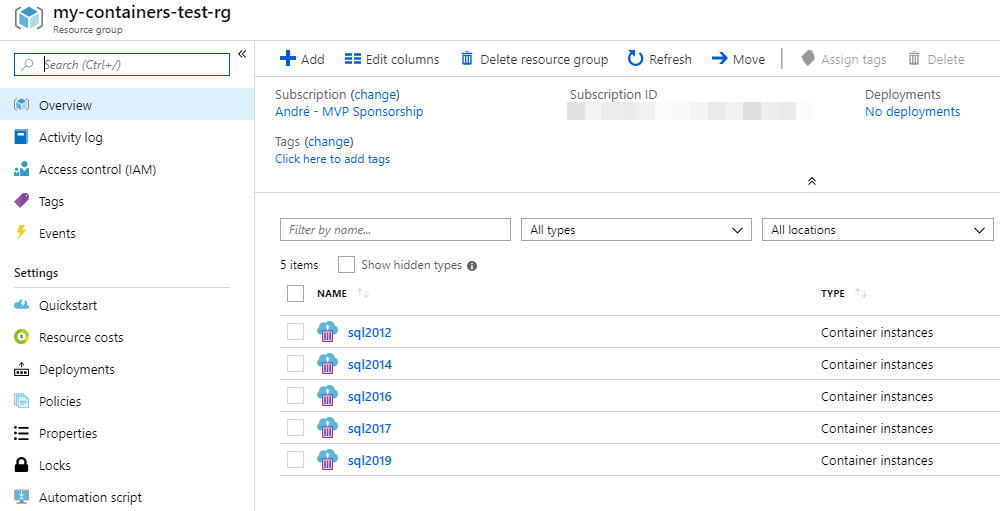

Then make sure you have an empty resource group.
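If the resource group doesn’t exist yet, it could be created straight from the Cloud Shell. A rough sketch only: the function name `ensure_resource_group` and the `westeurope` default location are assumptions for illustration, so pick whatever fits your subscription.

```shell
#!/bin/bash

# Hypothetical helper: create the resource group if it is missing and
# print how many resources it currently holds (0 means it is empty).
ensure_resource_group() {
    local rg="$1" location="${2:-westeurope}"   # the location default is an assumption

    # 'az group exists' prints true or false
    if [ "$(az group exists --name "$rg")" != "true" ]; then
        az group create --name "$rg" --location "$location" --output none
    fi

    # Count the resources that already live in the group
    az resource list --resource-group "$rg" --query "length(@)" --output tsv
}

# Usage (not run here): ensure_resource_group my-containers-test-rg westeurope
```

If the number it prints is not 0, the group already contains something and is not the empty group you want for this.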

My little solution consists of 3 files:

sqlcontainers.config, which holds the name of the resource group where the containers should land, the dns-suffix that my container names will have to make sure they are unique, and the sa password that they will have.

    resource_group=my-containers-test-rg
    dnssuffix=mygeneratedsuffix
    sapwd="mygeneratedpassword"

Tip: Generate the suffix and the sa password with a password manager. Don’t use well-known simple passwords or prefixes that have a meaning and might be easy to guess. These SQL Server instances will be available on the internet and accessible by anyone if you don’t choose a complex password. Also, don’t use them to store sensitive data; this should be used for testing purposes only!
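If there is no password manager at hand, the shell itself can produce random values. This is a minimal sketch, not a recommendation over a password manager: the helper names `random_suffix` and `random_password` and the default lengths are made up for illustration. The password helper retries until the value holds an uppercase letter, a lowercase letter and a digit, since SQL Server expects characters from at least three categories.

```shell
#!/bin/bash

# Hypothetical helper: random string of lowercase letters and digits,
# usable as the dnssuffix value.
random_suffix() {
    local len="${1:-12}"
    LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$len"
    echo
}

# Hypothetical helper: random alphanumeric password that contains at least
# one uppercase letter, one lowercase letter and one digit.
random_password() {
    local len="${1:-24}" pwd
    while :; do
        pwd="$(LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$len")"
        # Keep the candidate only if all three character classes are present
        if printf '%s' "$pwd" | grep -q '[[:upper:]]' &&
           printf '%s' "$pwd" | grep -q '[[:lower:]]' &&
           printf '%s' "$pwd" | grep -q '[[:digit:]]'; then
            break
        fi
    done
    printf '%s\n' "$pwd"
}

# Usage (not run here):
#   echo "dnssuffix=$(random_suffix)"
#   echo "sapwd=\"$(random_password)\""
```

Sticking to letters and digits keeps the values free of characters that would need quoting or escaping in the config file.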

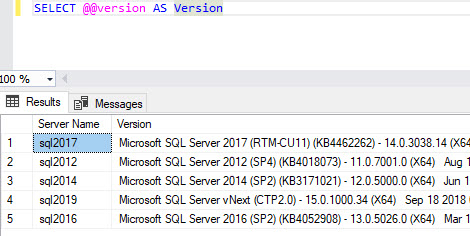

sqlcontainers.csv, which contains a comma-separated list of the publicly available SQL Server container images that I’m using, the name each container should have (which also gets the suffix from the config file for its DNS name) and the operating system type (windows or linux).

    dbafromthecold/sqlserver2012dev:sp4,sql2012,windows
    dbafromthecold/sqlserver2014dev:sp2,sql2014,windows
    dbafromthecold/sqlserver2016dev:sp2,sql2016,windows
    mcr.microsoft.com/mssql/server:2017-latest-ubuntu,sql2017,linux
    mcr.microsoft.com/mssql/server:vNext-CTP2.0-ubuntu,sql2019,linux

Microsoft provides us with the (Linux) containers for SQL Server 2017 and 2019. The containers for the older versions have been created by Andrew Pruski. Andrew blogs a lot about containers and SQL Server; do take a look if you want to know more about it!

sqlcontainers.sh, the bash script that loads the config parameters and then loops through the items in the csv file to create the containers.

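    #!/bin/bash

    # Directory of this script, so the config and csv are found next to it
    script_dir="$(dirname "${BASH_SOURCE[0]}")"

    # Load resource_group, dnssuffix and sapwd
    . "${script_dir}/sqlcontainers.config"

    # Each csv line holds: image,name,ostype
    while IFS=, read -r image name ostype
    do
        dnsname="${name}-${dnssuffix}"
        echo "Creating container from: ${image}"
        # Run in the background (&) and discard all output (&>/dev/null)
        az container create --resource-group "${resource_group}" --image "${image}" --name "${name}" --ports 1433 --dns-name-label "${dnsname}" --os-type "${ostype}" --environment-variables ACCEPT_EULA=Y SA_PASSWORD="${sapwd}" &>/dev/null &
    done < "${script_dir}/sqlcontainers.csv"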

For us SQL Server people, writing a bash script is not something we do every day, so I’m not ashamed to admit that I googled quite a bit before I figured out all the moving parts 😉 It basically loads the config parameters, then the while loop goes through the items in the csv file and creates the containers. (The “&>/dev/null &” part discards the output and makes sure each container is created in the background, asynchronously.)
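To see what the loop does without creating anything, the same `read` construct can be tried on its own. A small sketch under assumptions: `print_plan` is a hypothetical name, and the `|| [ -n "$image" ]` part is an addition that is not in the script above; it keeps a last line without a trailing newline from being skipped.

```shell
#!/bin/bash

# Hypothetical dry run: print what would be created for each csv line.
# Arguments: path to the csv file, dns suffix.
print_plan() {
    local csv="$1" suffix="$2" image name ostype

    # IFS=, splits each line on commas into the three variables.
    # The '|| [ -n "$image" ]' also handles a last line without a newline.
    while IFS=, read -r image name ostype || [ -n "$image" ]
    do
        echo "${name}-${suffix} <- ${image} (${ostype})"
    done < "$csv"
}

# Usage (not run here): print_plan sqlcontainers.csv mygeneratedsuffix
```

Each output line shows the DNS label, the image and the operating system type that one pass of the loop would use.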

Just create a new directory in the Cloud Shell and upload your files. (If you created them in a Windows editor, make sure they have Linux line endings (LF) and not the Windows CR/LF; the Cloud Shell does not like those and will give a cryptic error message.)
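If the files did end up with Windows line endings, they can be repaired after uploading. A minimal sketch; the function name `to_unix_line_endings` is hypothetical, and it simply deletes every carriage return in the given files.

```shell
#!/bin/bash

# Hypothetical helper: convert files from CR/LF to LF line endings in place.
to_unix_line_endings() {
    local file tmp
    for file in "$@"; do
        tmp="$(mktemp)"
        # Delete every carriage return character, then write the result back;
        # using cat keeps the permissions of the original file
        tr -d '\r' < "$file" > "$tmp" && cat "$tmp" > "$file"
        rm -f "$tmp"
    done
}

# Usage (not run here):
#   to_unix_line_endings sqlcontainers.config sqlcontainers.csv sqlcontainers.sh
```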

Then all you need to do is start the script and wait around 15 minutes for the containers to be downloaded and installed.
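Because the script throws away all output, it can help to check on the containers while waiting. A sketch under assumptions: `show_container_states` is a hypothetical name, and it relies on `az container show` exposing the state under `instanceView.state`, which is worth verifying against your CLI version.

```shell
#!/bin/bash

# Hypothetical helper: print the state of every container named in the csv.
# Arguments: resource group, path to the csv file.
show_container_states() {
    local rg="$1" csv="$2" image name ostype state

    while IFS=, read -r image name ostype || [ -n "$image" ]
    do
        # Ask Azure for the state (for example Pending or Running);
        # stdin comes from /dev/null so az cannot consume the csv lines
        state="$(az container show --resource-group "$rg" --name "$name" \
            --query instanceView.state --output tsv 2>/dev/null < /dev/null)"
        # An empty answer (not created yet, or an error) is shown as 'unknown'
        echo "${name}: ${state:-unknown}"
    done < "$csv"
}

# Usage (not run here): show_container_states my-containers-test-rg sqlcontainers.csv
```

A quicker overview without the csv file should also be available with `az container list --resource-group my-containers-test-rg --output table`.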

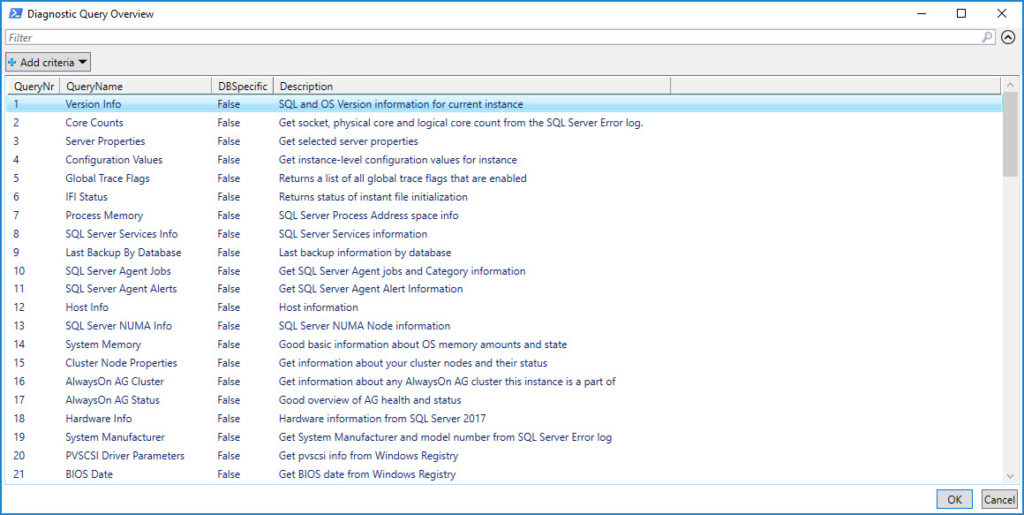

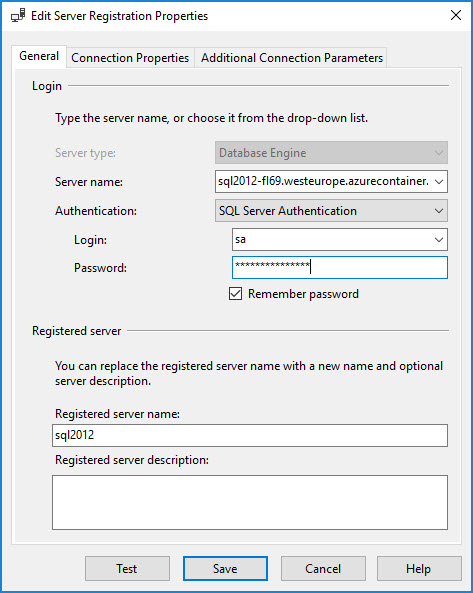

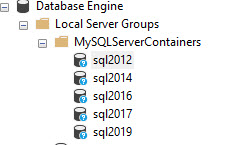

After that, a good idea would be to create registered servers so you can use them easily from Management Studio.
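To register the servers you need their full DNS names. A small sketch that lists them; `list_sql_hosts` is a hypothetical name, and it assumes that `az container list` returns the `ipAddress.fqdn` property for each container group.

```shell
#!/bin/bash

# Hypothetical helper: print the public DNS name of every container
# in the resource group, one per line.
list_sql_hosts() {
    local rg="$1"
    az container list --resource-group "$rg" \
        --query "[].ipAddress.fqdn" --output tsv
}

# Usage (not run here): list_sql_hosts my-containers-test-rg
# Each name can be used as the server name, with sa and the password
# from sqlcontainers.config as the login.
```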

Azure Container Instances are more expensive than running a VM with the same resources, so make sure you delete the containers when you’re done!
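Cleaning up can reuse the same csv file. A minimal sketch, assuming the containers were created with the names from the csv; `delete_containers` is a hypothetical name.

```shell
#!/bin/bash

# Hypothetical helper: delete every container named in the csv file.
# Arguments: resource group, path to the csv file.
delete_containers() {
    local rg="$1" csv="$2" image name ostype

    while IFS=, read -r image name ostype || [ -n "$image" ]
    do
        echo "Deleting container: ${name}"
        # --yes skips the confirmation prompt; stdin comes from /dev/null
        # so az cannot consume the remaining csv lines
        az container delete --resource-group "$rg" --name "$name" \
            --yes --output none < /dev/null
    done < "$csv"
}

# Usage (not run here): delete_containers my-containers-test-rg sqlcontainers.csv
```

If the resource group holds nothing else, deleting the whole group with `az group delete --name my-containers-test-rg --yes` is an alternative; that removes everything in it.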