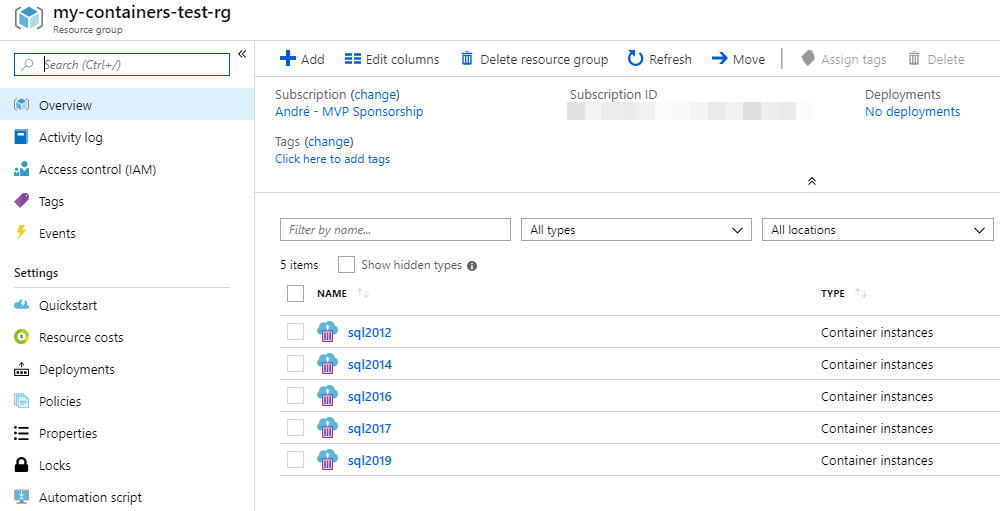

Every now and then (actually, quite often, now that I think of it) I need to test code on a bunch of different versions of SQL Server, and I prefer those SQL Server instances to be fresh every time. The quickest way that works for me: fire up my Azure Cloud...

Gary Bargsley (b|t) is the host of this month's T-SQL Tuesday #110, "Automate All the Things". The question he asks is easy: what do you want to automate, or what automation are you proud of completing? My answer to that is simple...

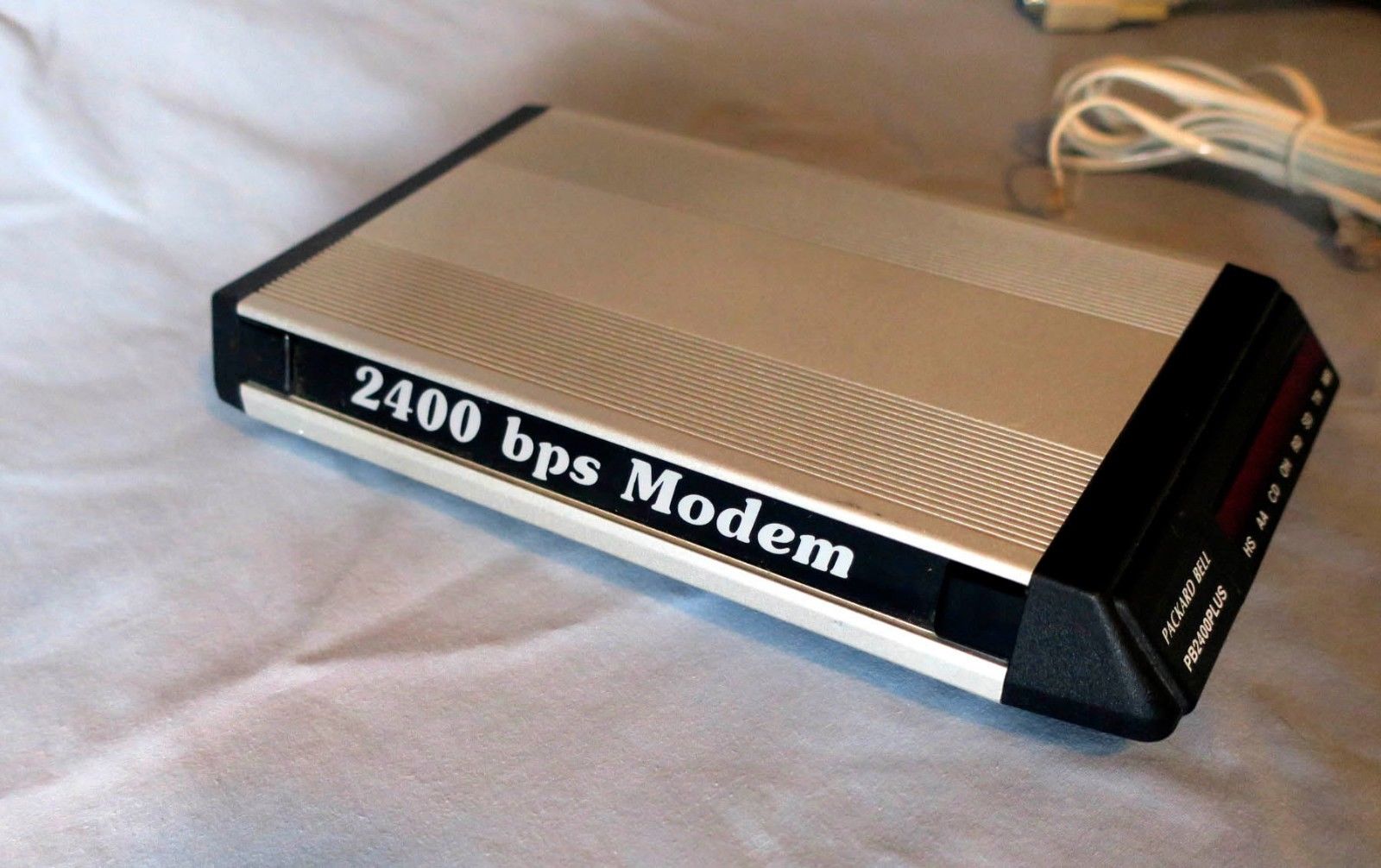

January 1st, 1989 was my first day at work for my first job in IT. You would think that everything was completely different 30 years ago. The advance in sheer computing power and the evolution of languages and frameworks had me thinking for a bit that...